The phrase “superhuman vision” usually triggers some eye-rolling.

It’s been used for everything from camera phones to security software. Most of the time, it just means “slightly better than before.”

This time feels different.

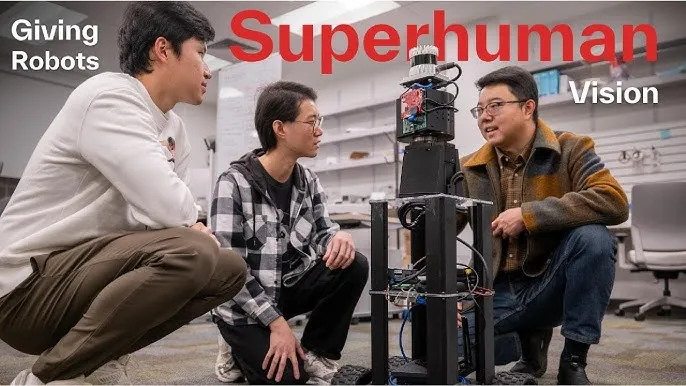

Researchers have unveiled a new robotic vision system that can detect, interpret, and react to visual information in ways humans simply can’t. Not just faster reflexes. Not just sharper eyesight. A different kind of seeing altogether.

And yes, that distinction matters.

What makes this system unusual

Human vision is impressive, but it has limits. We miss things. We get tired. We struggle with low light, glare, or overwhelming visual noise.

This new system is built to sidestep many of those problems.

It combines ultra-high-speed cameras, neural network processing, and sensor fusion to analyze scenes at a level of detail that goes beyond human perception. That includes spotting micro-movements, subtle material changes, and patterns invisible to the naked eye.

In lab demonstrations, the system can track fast-moving objects that blur into nothing for humans. It can detect tiny cracks forming in materials before they become visible. It can distinguish between visually similar objects based on texture shifts most people wouldn’t notice.

That’s where things get interesting.

This is not about replacing human eyes. It’s about giving machines a way to see the world differently.

Speed is part of it, but not the whole story

A lot of robotic vision systems focus on speed. Faster frame rates. Lower latency. Quick reactions.

This one does that, but it also focuses on understanding.

The system doesn’t just record images. It interprets context. It evaluates motion, depth, and material properties simultaneously. That allows robots to make decisions based on what is likely to happen next, not just what is happening now.

Early signs suggest this reduces errors in unpredictable environments. A robot can anticipate a falling object. It can adjust grip pressure before something slips. It can recognize when a surface is unstable rather than finding out the hard way.

This part matters more than it sounds.

Most real-world environments are messy. Vision systems that only work in controlled settings hit a wall very quickly.

Why researchers are excited, but cautious

Calling something “superhuman” invites scrutiny. And the researchers involved seem aware of that.

They’re careful to say the system excels in specific tasks, not general perception. It doesn’t understand meaning the way humans do. It doesn’t recognize emotions. It doesn’t replace judgment.

What it does is measure and process visual data with extreme precision.

That precision could be transformative in the right contexts.

Manufacturing is an obvious one. Spotting defects earlier. Improving quality control. Reducing waste. The same applies to infrastructure inspection, where detecting microscopic changes can prevent major failures.

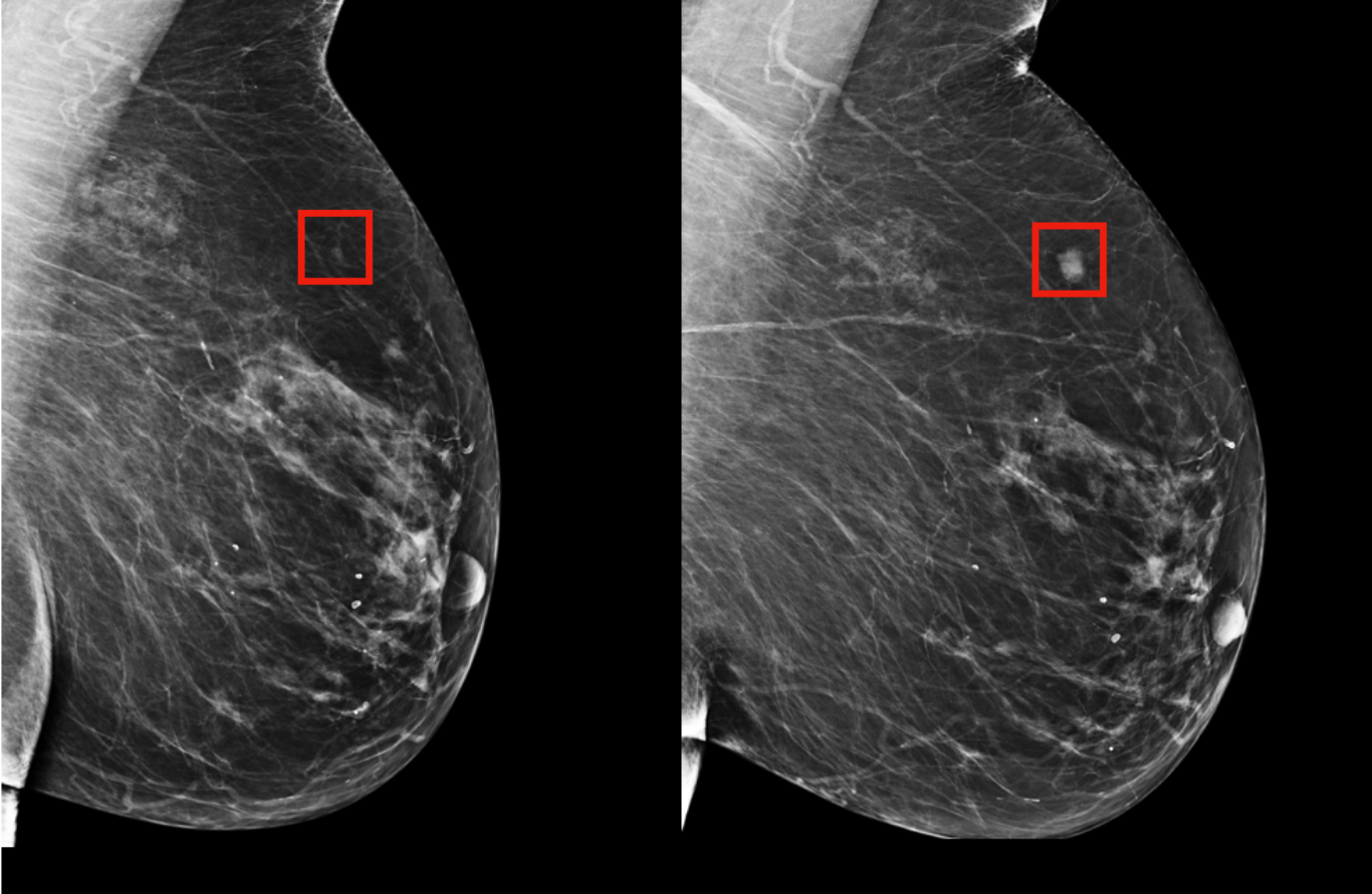

Healthcare applications are being discussed too, though those remain early-stage. Surgical robotics, imaging assistance, and lab automation could benefit, but clinical deployment will take time.

And regulatory approval.

The data challenge nobody likes talking about

One reason this system hasn’t appeared sooner is data.

Training vision models at this level requires massive datasets. Not just images, but annotated sequences showing how materials behave under stress, motion, or change. Collecting that data is slow and expensive.

The researchers say they used a mix of real-world recordings and synthetic data to make up for the shortage of real examples. That approach is becoming more common, but it also raises questions about how well a model trained partly on simulated data generalizes to real conditions.

Will the system perform as well outside the lab? In dusty factories. In rain. In poor lighting.

Early tests suggest it handles variation better than previous systems, but real-world validation is still ongoing.

That uncertainty is important to acknowledge.

What this could mean for robotics more broadly

Robotics has often struggled with perception. Movement has improved. Control systems are better. But seeing and understanding the environment remains one of the hardest problems.

If this vision system scales, it could unlock more autonomy. Robots that don’t need perfectly structured environments. Machines that adapt rather than pause and wait for instructions.

That would shift how humans interact with machines.

Instead of supervising every step, people would set goals and monitor outcomes. The robot would handle the visual complexity in between.

That’s a big shift. And not everyone is ready for it.

There are limits, and the researchers aren’t hiding them

Despite the headlines, this is not a general-purpose robot brain.

The system requires significant computing power. Energy use is non-trivial. Cost will be a barrier, at least initially. And integration with existing robots won’t be seamless.

Researchers openly say this is a platform, not a finished product.

It will likely appear first in high-value industrial settings. Places where failure is expensive and precision pays for itself.

Wider adoption will depend on cost reductions and further refinement.

What happens next

Over the next year, expect pilot deployments. Controlled rollouts. Lots of benchmarking against human performance and older systems.

Some claims will hold up. Others may soften.

That’s normal.

What feels different here is the direction. Instead of trying to mimic human vision, researchers are leaning into what machines do better.

Seeing more. Seeing faster. Seeing patterns we can’t.

Whether that turns into everyday technology or remains a specialized tool depends on what happens outside the lab.

But for now, robotic vision has taken a noticeable step forward.

And it’s one worth paying attention to.