For years, Stack Overflow was the place developers went when they got stuck. Not blogs. Not docs. Definitely not forums. You pasted your error, scrolled past a bit of sarcasm, and somewhere down the page was the answer that actually worked.

That habit is breaking.

Not overnight. Not dramatically. But steadily enough that even people inside the developer community are starting to admit it feels different now.

The rise of AI assistants like ChatGPT and Claude is changing how developers search for answers. And Stack Overflow is right in the blast zone.

The shift didn’t start with layoffs or policy changes

It started with convenience.

Instead of opening a browser, typing a question, skimming five tabs, and hoping one answer applies to your setup, developers can now just ask an AI. In plain language. With context. Sometimes with their actual code pasted in.

And they get an answer instantly.

Not always perfect. Not always correct. But often good enough to move forward.

That’s the key part people underestimate. Developers are not always looking for the “best” answer. They’re often looking for the fastest unblock.

ChatGPT, Claude, and similar tools fit that moment uncomfortably well.

Stack Overflow’s model was always a little fragile

To be fair, Stack Overflow’s system worked because of strong incentives and strict norms.

Ask a bad question, you got downvoted. Duplicate question, closed. Miss a detail, someone would tell you. Sometimes rudely.

That friction was part of what kept quality high. But it also scared people off, especially beginners.

AI doesn’t judge. It doesn’t say “this was answered in 2014.” It doesn’t demand a minimal reproducible example before it responds. You ask, it tries.

That difference matters more than it sounds.

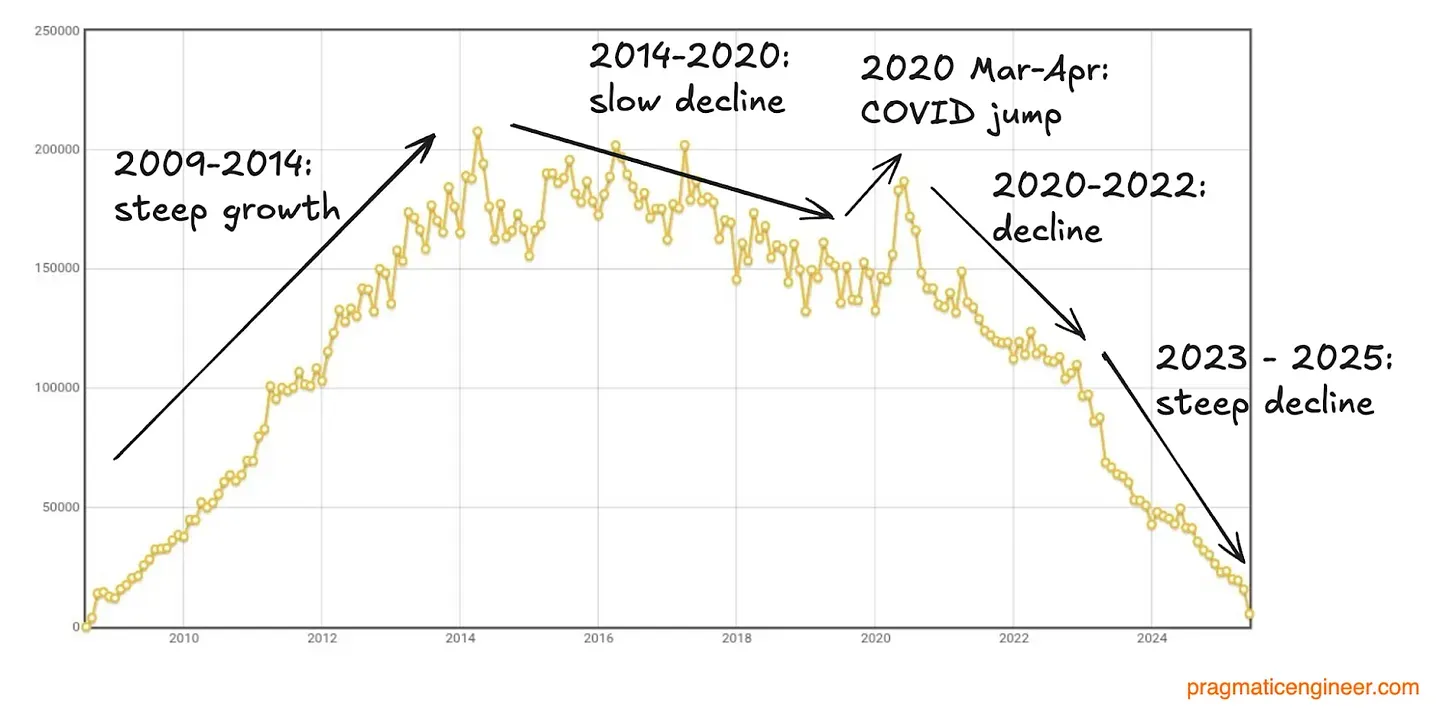

Traffic numbers tell part of the story

Stack Overflow has acknowledged declining traffic over the last couple of years. The company hasn’t blamed AI directly, but the timing is hard to ignore.

Developers are still coding more than ever. They just aren’t Googling errors the same way.

Instead of:

“TypeError: Cannot read properties of null site:stackoverflow.com”

It’s:

“Why does this JavaScript function break when I pass null?”

And that conversation happens inside an AI chat window, not on a search results page.

Once habits shift, they rarely snap back.

AI answers feel personal, even when they’re wrong

One reason these models are so sticky is that they respond like a colleague sitting next to you.

They explain things in your words. They adapt if you say “no, that’s not it.” They remember context within the conversation.

Stack Overflow answers are frozen in time. They’re often written for someone else, with a different setup, five years ago.

That’s not Stack Overflow’s fault. It’s just how forums work.

AI feels alive. Even when it’s hallucinating a bit.

And yes, hallucinations are still a problem. Ask any senior engineer and they’ll tell you they don’t blindly trust AI output. They verify. They double-check.

But here’s the uncomfortable part. A partly wrong AI answer that gets you 80 percent of the way there can still be more useful than a perfect forum answer you never find.

Contribution is dropping, not just consumption

Another quiet change is who is still answering questions.

If experienced developers are solving their own problems with AI, they’re less likely to hang around answering basic questions on forums. That reduces fresh, high-quality answers over time.

It becomes a feedback loop.

Less traffic leads to fewer contributors. Fewer contributors mean slower responses. Slower responses push more users toward AI.

None of this happens in a single announcement. It just… happens.

Stack Overflow isn’t dead. But it’s no longer the first stop

This part gets misunderstood a lot.

Stack Overflow still matters. Its archive is massive. AI models themselves were trained on its content. When ChatGPT explains a bug, odds are the underlying knowledge came from a Stack Overflow post written years ago by a human.

But the role has changed.

Stack Overflow is becoming a reference library rather than a live help desk. A place AI pulls from, not where developers actively show up first.

That’s a big identity shift for a platform built on community interaction.

The company knows this, and it’s trying to adapt

Stack Overflow has experimented with AI features of its own: AI-powered search under the OverflowAI banner, data-licensing partnerships with AI companies, changes to moderation tone. There’s an awareness that the old model cannot stay untouched.

But adapting a culture is harder than adding a feature.

The platform’s strength was its strictness. AI thrives on flexibility. Blending those two philosophies is not simple.

What developers should realistically expect next

In the short term, expect more AI-first workflows. IDEs with built-in assistants. Debugging via chat. Documentation that reads more like conversation.

Stack Overflow will likely remain relevant for edge cases, deep explanations, and long-lived knowledge. Especially when accuracy really matters.

But the days of every developer tabbing over to Stack Overflow multiple times a day? That era is probably over.

Not because Stack Overflow failed.

Because the way we ask questions has changed.

And once people get used to talking to their tools instead of searching for answers, it’s hard to go back.